

In Chapter 1, we completed the basic cluster planning and successfully deployed the etcd database cluster.

| Role | IP | Hostname | Components |
| --- | --- | --- | --- |
| Master01 | 192.168.22.10 | k8s-master01 | kube-apiserver, kube-scheduler, kube-controller-manager, containerd, keepalived, haproxy |
| Master02 | 192.168.22.11 | k8s-master02 | kube-apiserver, kube-scheduler, kube-controller-manager, containerd, etcd, keepalived, haproxy |
| Node01 | 192.168.22.12 | k8s-node01 | kubelet, kube-proxy, containerd, etcd |
| Node02 | 192.168.22.13 | k8s-node02 | kubelet, kube-proxy, containerd, etcd |

keepalived and haproxy are deployed on both master nodes.

Install keepalived and haproxy with yum:

yum -y install keepalived haproxy

Modify the haproxy configuration:

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg-bak

vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 warning

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5s

timeout client 30s

timeout server 30s

timeout http-request 5s

timeout http-keep-alive 30s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 192.168.22.10:6443 check port 6443 inter 2000 rise 2 fall 3

server k8s-master02 192.168.22.11:6443 check port 6443 inter 2000 rise 2 fall 3

Configure keepalived on k8s-master01:

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-bak

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id k8s-master01

script_user root

}

vrrp_script chkapiserver {

script "/etc/keepalived/checkapiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance xx1 {

state MASTER

interface ens33

mcast_src_ip 192.168.22.10

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHAKAAUTH

}

virtual_ipaddress {

192.168.22.222

}

track_script {

chkapiserver

}

}

Configure keepalived on k8s-master02:

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-bak

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id k8s-master02

script_user root

}

vrrp_script chkapiserver {

script "/etc/keepalived/checkapiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance xx2 {

state BACKUP

interface ens33

mcast_src_ip 192.168.22.11

virtual_router_id 51

priority 80

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHAKAAUTH

}

virtual_ipaddress {

192.168.22.222

}

track_script {

chkapiserver

}

}

Health check script (required on all master nodes):

vim /etc/keepalived/checkapiserver.sh

#!/bin/bash

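# Counter for the number of failed checks

fail_count=0

# Check up to 3 times

for i in {1..3}; do

# Use pgrep to check whether a haproxy process exists

if ! pgrep -x haproxy > /dev/null; then

# No haproxy process found: increment the failure counter

fail_count=$((fail_count+1))

# Wait 1 second before retrying

sleep 1

else

# haproxy process found: reset the counter and leave the loop

fail_count=0

break

fi

done

# A failure count above 0 means all 3 checks failed, so haproxy is most likely stopped

if [ "$fail_count" -gt 0 ]; then

# Report that keepalived is about to be stopped

echo "haproxy is not running, stopping keepalived"

# Stop the keepalived service so the VIP can move to the other master

systemctl stop keepalived

# Exit with error status 1

exit 1

fi

# Everything is fine: exit with success status 0

exit 0

Save and exit.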

chmod +x /etc/keepalived/checkapiserver.sh

Start keepalived and haproxy:

systemctl daemon-reload

systemctl enable --now haproxy.service

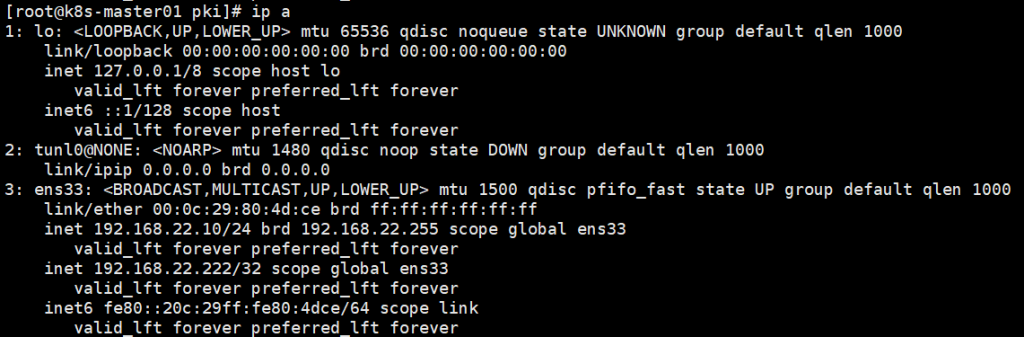

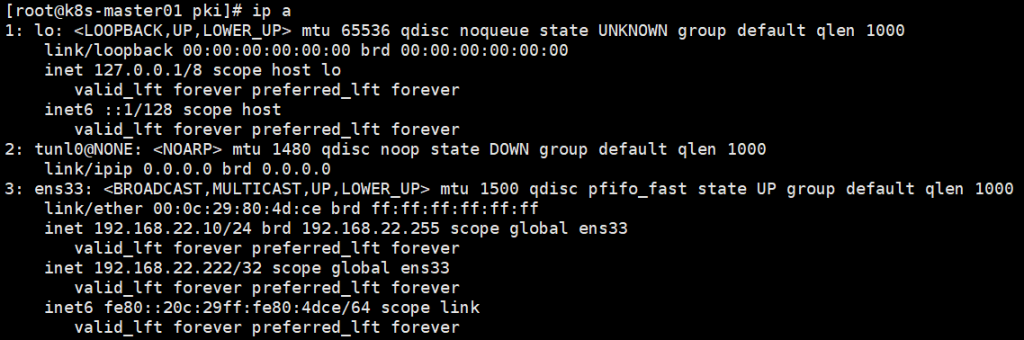
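Once haproxy is running, it can be worth confirming that it is actually listening on the ports defined in haproxy.cfg before starting keepalived. The following is a minimal sketch, not part of the original procedure: `port_is_listening` is a hypothetical helper that parses `ss -lnt` output from stdin, and the commented commands assume the ports 16443 and 33305 from the configuration above.

```shell
#!/bin/bash
# Hypothetical helper: reads `ss -lnt`-style output on stdin and
# succeeds if any LISTEN socket uses the given local port.
port_is_listening() {
    local port="$1"
    awk -v port="$port" '
        # In `ss -lnt` rows, field 1 is the state and field 4 is Local Address:Port.
        $1 == "LISTEN" {
            n = split($4, parts, ":")
            if (parts[n] == port) found = 1
        }
        END { exit !found }
    '
}

# Example usage on a master node (left commented out so the sketch has no side effects):
#   haproxy -c -f /etc/haproxy/haproxy.cfg          # syntax-check the configuration
#   ss -lnt | port_is_listening 16443 && echo "frontend k8s-master is listening"
#   ss -lnt | port_is_listening 33305 && echo "monitor frontend is listening"
#   curl -s -o /dev/null -w '%{http_code}\n' http://127.0.0.1:33305/monitor
```

The `monitor-uri /monitor` endpoint should answer with HTTP 200 while haproxy is up, which makes it a convenient probe that does not depend on kube-apiserver being deployed yet.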

systemctl enable --now keepalived.service

Check the IP addresses on the k8s-master01 master node:

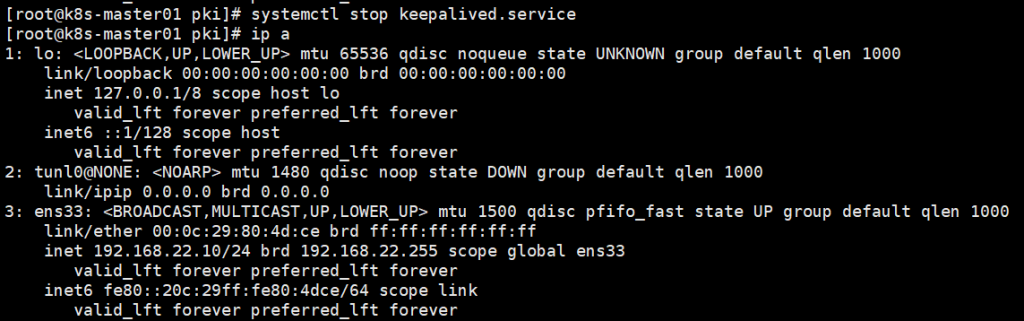
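The same check can be scripted instead of read off the screen. The sketch below assumes the VIP 192.168.22.222 and the interface ens33 from the keepalived configuration; `has_vip` is a hypothetical helper that parses the one-line output of `ip -4 -o addr show` from stdin.

```shell
#!/bin/bash
# Hypothetical helper: reads `ip -4 -o addr show` output on stdin and
# succeeds if the given IPv4 address is assigned to some interface.
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        # In one-line (-o) output, field 3 is "inet" and field 4 is ADDRESS/PREFIX.
        $3 == "inet" {
            split($4, a, "/")
            if (a[1] == vip) found = 1
        }
        END { exit !found }
    '
}

# Example usage on a master node (commented out; it needs the real interface):
#   if ip -4 -o addr show dev ens33 | has_vip 192.168.22.222; then
#       echo "this node currently holds the VIP"
#   else
#       echo "the VIP is not on this node"
#   fi
```

Comparing the address field exactly, rather than grepping for the string, avoids matching unrelated addresses that merely share a prefix with the VIP.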

To verify failover, take k8s-master01 out of service by stopping keepalived on it:

systemctl stop keepalived.service

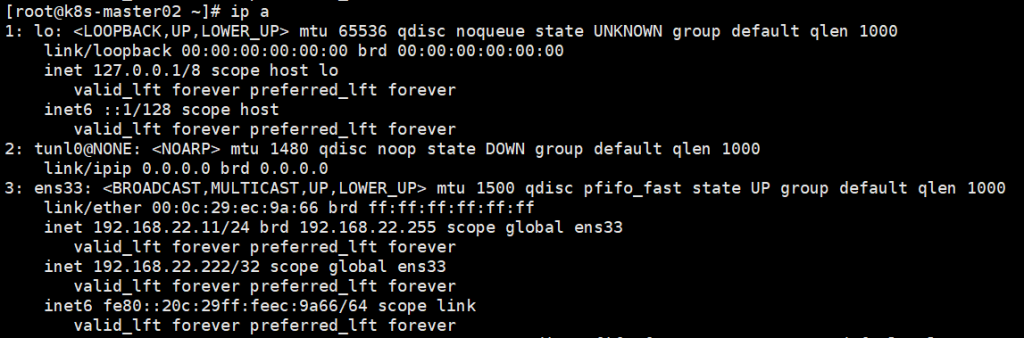

Check the IP addresses on k8s-master02; the VIP 192.168.22.222 should now appear there.
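The VIP does not move instantly, so a scripted verification may need to poll for a few seconds. A minimal sketch of such a polling loop follows; `wait_until` and `vip_present` are hypothetical helper names, and the retry count and delay in the usage comment are arbitrary assumptions rather than values from the keepalived configuration.

```shell
#!/bin/bash
# Hypothetical helper: run a command up to <tries> times, sleeping <delay>
# seconds between attempts. Succeeds as soon as the command succeeds.
wait_until() {
    local tries="$1" delay="$2" i=1
    shift 2
    while [ "$i" -le "$tries" ]; do
        if "$@"; then
            return 0
        fi
        # Do not sleep after the final failed attempt.
        if [ "$i" -lt "$tries" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Hypothetical check: succeeds if the given IPv4 address is assigned on this host.
vip_present() {
    ip -4 -o addr show | grep -qF "inet $1/"
}

# Example usage on k8s-master02 after stopping keepalived on k8s-master01
# (commented out so the sketch has no side effects):
#   wait_until 10 1 vip_present 192.168.22.222 && echo "VIP moved to this node"
```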

Then start keepalived on k8s-master01 again.
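The priorities in the two keepalived.conf files explain what to expect here. The sketch below works through the arithmetic under keepalived's track_script weighting rules (a negative weight is subtracted while the script fails, a positive weight is added while it succeeds); `effective_priority` is a hypothetical helper written only for this illustration.

```shell
#!/bin/bash
# Hypothetical helper: effective VRRP priority for one tracked script.
#   $1 = base priority, $2 = script weight, $3 = 1 if the script passes, 0 if it fails
effective_priority() {
    local base="$1" weight="$2" script_ok="$3"
    if [ "$weight" -lt 0 ] && [ "$script_ok" -eq 0 ]; then
        # Negative weight: priority drops while the check is failing.
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$script_ok" -eq 1 ]; then
        # Positive weight: priority rises while the check is passing.
        echo $((base + weight))
    else
        echo "$base"
    fi
}

effective_priority 100 -5 0   # k8s-master01 with a failing check: prints 95
effective_priority 80 -5 1    # k8s-master02 with a passing check: prints 80
```

Since 95 is still higher than 80, the `weight -5` adjustment alone would not move the VIP; it is the `systemctl stop keepalived` call inside checkapiserver.sh that hands the VIP to k8s-master02. When keepalived starts again on k8s-master01, its priority of 100 exceeds 80 and preemption is enabled by default, so the VIP should return to k8s-master01.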

Chapter 3 configures the core components of the Master (management and monitoring) nodes and the Node (worker) nodes.