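Introduction to Kubernetes Components

In Chapter 1, we completed the initial cluster planning and deployed the etcd database cluster.

In Chapter 2, we made the cluster highly available by deploying keepalived and haproxy, which provide service continuity and load balancing.

Next, we configure the core components on the Master (control-plane) nodes and the Node (worker) nodes to bring the cluster into service.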

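Master node components:

- kube-apiserver: the Kubernetes API server. It handles every request and is the single entry point to cluster resources;

- etcd: a distributed key-value store that holds the cluster configuration and state;

- kube-scheduler: assigns Pods to suitable Nodes;

- kube-controller-manager: runs the controllers that manage cluster resources and keep the actual state in line with the desired state.

Node components:

- kubelet: the agent that runs on every Node and keeps its Pods in the expected state;

- kube-proxy: implements the network proxy for Services and maintains the network rules;

- container runtime: the software that runs containers, such as Docker or containerd.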

| Role | IP | Hostname | Components |
| --- | --- | --- | --- |
| Master01 | 192.168.22.10 | k8s-master01 | kube-apiserver, kube-scheduler, kube-controller-manager, containerd, keepalived, haproxy |
| Master02 | 192.168.22.11 | k8s-master02 | kube-apiserver, kube-scheduler, kube-controller-manager, containerd, etcd, keepalived, haproxy |
| Node01 | 192.168.22.12 | k8s-node01 | kubelet, kube-proxy, containerd, etcd |
| Node02 | 192.168.22.13 | k8s-node02 | kubelet, kube-proxy, containerd, etcd |
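The table can be turned into a quick per-host check. The following is a minimal sketch, assuming each component runs as a systemd unit named after the component; the `units_for_role` and `check_role` helpers and the role names `master` and `node` are illustrative and not part of the original setup. etcd is left out because its placement is per host rather than per role.

```shell
#!/bin/sh
# Print the systemd units expected on a host, by role (taken from the table).
# The role names "master" and "node" are hypothetical labels for this sketch.
units_for_role() {
    case "$1" in
        master) echo "kube-apiserver kube-controller-manager kube-scheduler containerd keepalived haproxy" ;;
        node)   echo "kubelet kube-proxy containerd" ;;
        *)      echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Report the state of each expected unit; skipped when systemctl is unavailable.
check_role() {
    command -v systemctl >/dev/null 2>&1 || { echo "systemctl not found, skipping"; return 0; }
    for unit in $(units_for_role "$1"); do
        state=$(systemctl is-active "$unit" 2>/dev/null) || true
        echo "$unit: ${state:-unknown}"
    done
}

check_role master
```

On a worker node the call would be `check_role node`.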

Deploying the kube-apiserver service on Master01

Generate the kube-apiserver certificate

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/ca-config.json

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"expiry": "876000h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

vim /opt/kubernetes/pki/ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

vim /opt/kubernetes/pki/apiserver-csr.json

{

"CN": "kube-apiserver",

"hosts": [

"localhost",

"127.0.0.1",

"192.168.22.10",

"192.168.22.11",

"192.168.22.12",

"192.168.22.13",

"192.168.22.221",

"192.168.22.222",

"192.168.22.223",

"k8s-master01",

"k8s-master02",

"k8s-node01",

"k8s-node02",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

# Generate a self-signed certificate authority (CA) certificate
cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# Issue a certificate for the Kubernetes API server, signed by that CA
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver
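Every address or name that clients use to reach the API server has to appear in the certificate's Subject Alternative Name list, otherwise TLS verification fails. A minimal sketch for checking this, assuming `openssl` is installed; the `missing_sans` helper and the choice of names to check are illustrative.

```shell
#!/bin/sh
# Print every required name that does not appear in the given SAN text.
# $1: SAN line as printed by openssl; remaining args: names that must be present.
missing_sans() {
    san_text=$1; shift
    for name in "$@"; do
        # Split the comma-separated list and compare whole entries literally.
        printf '%s\n' "$san_text" | tr ',' '\n' | sed 's/^ *//; s/ *$//' \
            | grep -qxF -e "DNS:$name" -e "IP Address:$name" || echo "$name"
    done
}

cert=/opt/kubernetes/pki/apiserver.pem
if [ -f "$cert" ] && command -v openssl >/dev/null 2>&1; then
    sans=$(openssl x509 -in "$cert" -noout -text | grep -A1 'Subject Alternative Name' | tail -n 1)
    # The VIP, this host's IP, and one in-cluster service name.
    missing_sans "$sans" 192.168.22.222 192.168.22.10 kubernetes.default.svc
fi
```

No output means every listed name is covered by the certificate.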

[root@k8s-master02 pki]# ls apiserver*.pem

apiserver-key.pem apiserver.pem

Generate the apiserver aggregation-layer certificates

vim /opt/kubernetes/pki/front-proxy-ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "876000h"

}

}

vim /opt/kubernetes/pki/front-proxy-client-csr.json

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare front-proxy-ca

cfssl gencert -ca=front-proxy-ca.pem -ca-key=front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json |cfssljson -bare front-proxy-client

[root@k8s-master01 pki]# ls front-proxy-ca*.pem

front-proxy-ca-key.pem front-proxy-ca.pem

Create the ServiceAccount key pair

cd /opt/kubernetes/pki/

openssl genrsa -out sa.key 2048

openssl rsa -in sa.key -pubout -out sa.pub

Enable the service at boot and set its runtime parameters

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-apiserver \

--v=2 \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=192.168.22.10 \

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.22.11:2379,https://192.168.22.12:2379,https://192.168.22.13:2379 \

--etcd-cafile=/opt/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/opt/etcd/ssl/etcd.pem \

--etcd-keyfile=/opt/etcd/ssl/etcd-key.pem \

--client-ca-file=/opt/kubernetes/pki/ca.pem \

--tls-cert-file=/opt/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/opt/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/opt/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/opt/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/opt/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/opt/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=Hostname,InternalDNS,InternalIP,ExternalDNS,ExternalIP \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/opt/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/opt/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/opt/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true \

--logging-format=json

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

systemctl daemon-reload
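Before starting the service, it can save a restart loop to confirm that every certificate and key path referenced by the unit file exists. A minimal sketch, assuming flag values are absolute paths written as `--flag=/path`; the `unit_paths` helper is illustrative.

```shell
#!/bin/sh
# List the absolute file paths passed as --flag=/path values in a unit file.
unit_paths() {
    grep -oE -- '--[a-z-]+=/[^ \\]+' "$1" | cut -d= -f2- | sort -u
}

unit=/usr/lib/systemd/system/kube-apiserver.service
if [ -f "$unit" ]; then
    for path in $(unit_paths "$unit"); do
        [ -e "$path" ] || echo "missing: $path"
    done
fi
```

No output means every referenced file was found.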

systemctl enable --now kube-apiserver.service

Deploying the kube-controller-manager service on Master01

Generate the controller-manager certificate and controller-manager.kubeconfig

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes manager-csr.json | cfssljson -bare controller-manager

# Set the cluster parameters; the server address is the virtual IP (VIP)
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.22.222:6443 --kubeconfig=controller-manager.kubeconfig

# Set the client credentials
kubectl config set-credentials system:kube-controller-manager --client-certificate=controller-manager.pem --client-key=controller-manager-key.pem --embed-certs=true --kubeconfig=controller-manager.kubeconfig

# Set the context
kubectl config set-context system:kube-controller-manager@kubernetes --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=controller-manager.kubeconfig

# Make it the default context
kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=controller-manager.kubeconfig
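The same four `kubectl config` calls recur for every component in this chapter, with only the user name, certificate, and output file changing. They can be wrapped in a small helper. This is a sketch rather than part of the original procedure: `make_kubeconfig`, `run`, and the `DRY_RUN` variable are hypothetical names, and the server address is the VIP used in the commands above.

```shell
#!/bin/sh
# Build a kubeconfig for one component from its client certificate.
# Usage: make_kubeconfig USER CERT_BASENAME OUTPUT_FILE
# With DRY_RUN=1 the commands are printed instead of executed.
SERVER=https://192.168.22.222:6443   # VIP used throughout this chapter

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

make_kubeconfig() {
    user=$1; cert=$2; out=$3
    run kubectl config set-cluster kubernetes --certificate-authority=ca.pem \
        --embed-certs=true --server="$SERVER" --kubeconfig="$out"
    run kubectl config set-credentials "$user" --client-certificate="${cert}.pem" \
        --client-key="${cert}-key.pem" --embed-certs=true --kubeconfig="$out"
    run kubectl config set-context "${user}@kubernetes" --cluster=kubernetes \
        --user="$user" --kubeconfig="$out"
    run kubectl config use-context "${user}@kubernetes" --kubeconfig="$out"
}

# Print the commands for the controller manager without running them.
DRY_RUN=1
make_kubeconfig system:kube-controller-manager controller-manager controller-manager.kubeconfig
```

Setting `DRY_RUN=0` and running the script from /opt/kubernetes/pki/ would execute the commands instead of printing them.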

[root@k8s-master01 pki]# ls controller-manager*.pem

controller-manager-key.pem controller-manager.pem

Enable the service at boot and set its runtime parameters

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-controller-manager \

--v=2 \

--bind-address=0.0.0.0 \

--root-ca-file=/opt/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/opt/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/opt/kubernetes/pki/sa.key \

--kubeconfig=/opt/kubernetes/pki/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \

--cluster-cidr=172.16.0.0/12,fc00:2222::/112 \

--node-cidr-mask-size-ipv4=24 \

--node-cidr-mask-size-ipv6=120 \

--requestheader-client-ca-file=/opt/kubernetes/pki/front-proxy-ca.pem

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

systemctl daemon-reload
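Before starting the service, it is worth checking that the pod network is large enough for the planned node count. With `--cluster-cidr=172.16.0.0/12` and `--node-cidr-mask-size-ipv4=24`, each node receives one /24 carved out of the /12; the IPv6 range works the same way with /112 and /120. The arithmetic, as a minimal sketch (the helper names are illustrative, and the per-node figure is an upper bound because the network plugin may reserve further addresses):

```shell
#!/bin/sh
# Number of per-node subnets that fit in the cluster CIDR: 2^(node_mask - cluster_mask)
# $1: cluster CIDR prefix length, $2: per-node prefix length
node_subnets() {
    echo $(( 1 << ($2 - $1) ))
}

# IPv4 addresses in one node subnet, excluding the network and broadcast addresses
# $1: per-node prefix length
addrs_per_node() {
    echo $(( (1 << (32 - $1)) - 2 ))
}

echo "IPv4 node subnets:       $(node_subnets 12 24)"
echo "IPv4 addresses per node: $(addrs_per_node 24)"
echo "IPv6 node subnets:       $(node_subnets 112 120)"
```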

systemctl enable --now kube-controller-manager.service

Deploying the kube-scheduler service on Master01

Generate the scheduler certificate

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/scheduler-csr.json

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes scheduler-csr.json | cfssljson -bare scheduler
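The CSR files for the controller manager, scheduler, admin user, and kube-proxy differ only in `CN` and `O`, which Kubernetes maps to the user name and group of the client. A minimal sketch that generates such a file from those two values; `write_csr` is a hypothetical helper, and the remaining fields are copied from the files in this chapter.

```shell
#!/bin/sh
# Print a cfssl CSR document for a client certificate.
# $1: common name (becomes the Kubernetes user name)
# $2: organization (becomes the Kubernetes group)
write_csr() {
    cat <<EOF
{
  "CN": "$1",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "$2",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF
}

write_csr system:kube-scheduler system:kube-scheduler
```

Redirecting the output, for example `write_csr admin system:masters > admin-csr.json`, would produce a file equivalent to the hand-written one.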

# Set the cluster parameters; the server address is the virtual IP (VIP)
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.22.222:6443 --kubeconfig=scheduler.kubeconfig

# Set the client credentials
kubectl config set-credentials system:kube-scheduler --client-certificate=scheduler.pem --client-key=scheduler-key.pem --embed-certs=true --kubeconfig=scheduler.kubeconfig

# Set the context
kubectl config set-context system:kube-scheduler@kubernetes --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=scheduler.kubeconfig

# Make it the default context
kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=scheduler.kubeconfig

[root@k8s-master02 pki]# ls scheduler*.pem

scheduler-key.pem scheduler.pem

Enable the service at boot and set its runtime parameters

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-scheduler \

--v=2 \

--bind-address=0.0.0.0 \

--secure-port=10259 \

--leader-elect=true \

--kubeconfig=/opt/kubernetes/pki/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl enable --now kube-scheduler.service

Generate the admin certificate and admin.kubeconfig

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/admin-csr.json

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json |cfssljson -bare admin

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.22.222:6443 --kubeconfig=admin.kubeconfig //设置集群参数,这里的IP地址写虚拟 IP 地址(VIP)

kubectl config set-credentials kubernetes-admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=admin.kubeconfig //设置客户端认证参数

kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=admin.kubeconfig //设置上下文参数

kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=admin.kubeconfig //设置默认上下文

mkdir -pv /root/.kube

cp /opt/kubernetes/pki/admin.kubeconfig /root/.kube/config

[root@k8s-master01 pki]# ls admin*.pem

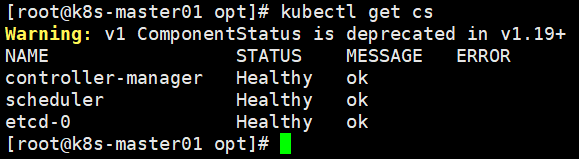

admin-key.pem admin.pemMaster01节点集群健康状态检查

kubectl get cs
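`kubectl get cs` relies on the ComponentStatus API, which has been deprecated since Kubernetes v1.19, so it is useful to have a second check. Each control-plane component also serves a `/healthz` endpoint. A minimal sketch, assuming `curl` is installed and that unauthenticated access to the health endpoints is left at its defaults; ports 6443 and 10259 come from the unit files in this chapter, while 10257 is the kube-controller-manager default and is an assumption here because the unit file does not set it. The helper names are illustrative.

```shell
#!/bin/sh
# Health endpoints of the control-plane components on this host.
component_endpoints() {
    echo "kube-apiserver https://127.0.0.1:6443/healthz"
    echo "kube-controller-manager https://127.0.0.1:10257/healthz"
    echo "kube-scheduler https://127.0.0.1:10259/healthz"
}

# Query each endpoint and print the response body, or "unreachable" on failure.
probe_components() {
    command -v curl >/dev/null 2>&1 || { echo "curl not found, skipping"; return 0; }
    component_endpoints | while read -r name url; do
        # -k: the serving certificates are not in the system trust store
        body=$(curl -ks --max-time 3 "$url") || body="unreachable"
        echo "$name: $body"
    done
}

probe_components
```

With a working kubeconfig, `kubectl get --raw='/readyz?verbose'` gives a more detailed view of the API server itself.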

TLS Bootstrapping configuration on Master01

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/node-autoapprove-bootstrap-rbac.yaml

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-rkl4d5
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet init'."
  token-id: rkl4d5
  token-secret: 1lyj3lmqrslv13yw
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes

kubectl apply -f node-autoapprove-bootstrap-rbac.yaml

kubectl get clusterroles|grep system:node-bootstrapper

Generate the bootstrap-kubelet.kubeconfig file
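The token written into this kubeconfig is the `token-id` and `token-secret` of the Secret, joined by a dot, and the Secret itself must be named `bootstrap-token-` followed by the token ID. Bootstrap tokens follow the pattern `[a-z0-9]{6}.[a-z0-9]{16}`. A minimal sketch for validating a token and for generating a fresh pair instead of reusing a published example value; the helper names are illustrative.

```shell
#!/bin/sh
# Succeed if $1 has the bootstrap-token shape: 6 chars, a dot, 16 chars, all [a-z0-9].
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -qxE '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# Print $1 random characters from [a-z0-9], filtered out of 1024 random bytes.
random_chars() {
    head -c 1024 /dev/urandom | LC_ALL=C tr -dc 'a-z0-9' | head -c "$1"
}

token="$(random_chars 6).$(random_chars 16)"
is_bootstrap_token "$token" && echo "new token: $token"
is_bootstrap_token "rkl4d5.1lyj3lmqrslv13yw" && echo "example token is well-formed"
```

A newly generated token has to be written to both the Secret (`token-id`, `token-secret`, and the Secret name) and the `--token` argument used in this step.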

# Set the cluster parameters
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.22.222:6443 --kubeconfig=bootstrap-kubelet.kubeconfig

# Set the client credentials
kubectl config set-credentials tls-bootstrap-token-user --token=rkl4d5.1lyj3lmqrslv13yw --kubeconfig=bootstrap-kubelet.kubeconfig

# Set the context
kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=bootstrap-kubelet.kubeconfig

# Make it the default context
kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=bootstrap-kubelet.kubeconfig

Authorize the apiserver to access the kubelet

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/kube-apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    # Must equal the CN of the client certificate the apiserver presents to the kubelet
    # (apiserver.pem, whose CN in apiserver-csr.json is "kube-apiserver")
    name: kube-apiserver

kubectl apply -f kube-apiserver-to-kubelet-rbac.yaml

kubectl get clusterroles|grep system:kube-apiserver

Installing the Kubernetes worker-node components

Deploying the kubelet service on Master01

cd /opt/kubernetes/cfg/

vim /opt/kubernetes/cfg/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.254
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=containerd.service

[Service]

ExecStart=/opt/kubernetes/bin/kubelet \

--v=2 \

--bootstrap-kubeconfig=/opt/kubernetes/pki/bootstrap-kubelet.kubeconfig \

--kubeconfig=/opt/kubernetes/pki/kubelet.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet-conf.yml \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--node-labels=node.kubernetes.io/node=

[Install]

WantedBy=multi-user.target

mkdir -pv /etc/kubernetes/manifests

systemctl daemon-reload

systemctl enable --now kubelet.service

Deploying the kube-proxy service on Master01

Generate the kube-proxy certificate and kube-proxy.kubeconfig

cd /opt/kubernetes/pki/

vim /opt/kubernetes/pki/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-proxy",

"OU": "Kubernetes-manual"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Set the cluster parameters
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server="https://192.168.22.222:6443" --kubeconfig=kube-proxy.kubeconfig

# Set the client credentials
kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

# Set the context
kubectl config set-context kube-proxy@kubernetes --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

# Make it the default context
kubectl config use-context kube-proxy@kubernetes --kubeconfig=kube-proxy.kubeconfig

Create the kube-proxy configuration file

vim /opt/kubernetes/cfg/kube-proxy.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /opt/kubernetes/pki/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12,fc00:2222::/112
configSyncPeriod: 15m0s
conntrack:
  #max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  #masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
#udpIdleTimeout: 250ms

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-proxy \

--v=2 \

--config=/opt/kubernetes/cfg/kube-proxy.yaml \

--cluster-cidr=172.16.0.0/12,fc00:2222::/112

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

systemctl daemon-reload
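kube-proxy.yaml selects `mode: "ipvs"` with the `rr` scheduler, which depends on the IPVS kernel modules being available. A minimal sketch for checking the loaded modules before starting the service. The module list (`ip_vs`, `ip_vs_rr`, `nf_conntrack`) is an assumption based on that configuration, and modules compiled into the kernel do not appear in `/proc/modules`, so a reported miss is a hint rather than proof. `missing_modules` is an illustrative helper.

```shell
#!/bin/sh
# Print each required module that is not listed in the given modules file.
# $1: file in /proc/modules format; remaining args: module names.
missing_modules() {
    file=$1; shift
    for mod in "$@"; do
        # The module name is the first whitespace-separated field of each line.
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$file" || echo "$mod"
    done
}

if [ -r /proc/modules ]; then
    missing_modules /proc/modules ip_vs ip_vs_rr nf_conntrack
fi
```

A module reported here can usually be loaded with `modprobe` followed by its name.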

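systemctl enable --now kube-proxy.service

Package the kubernetes directory and copy it to Master02, Node01, and Node02

cd /opt/

# --exclude must precede the path it applies to, and the pattern is quoted so the shell does not expand it
tar -zcvf kubernetes.tar.gz --exclude='./kubernetes/logs/*' ./kubernetes/

scp kubernetes.tar.gz k8s-master02:/opt/

scp kubernetes.tar.gz k8s-node01:/opt/

scp kubernetes.tar.gz k8s-node02:/opt/

Start the control-plane components on Master02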

cd /opt/

tar xvf kubernetes.tar.gz
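The unit files are not part of the archive, because they live under /usr/lib/systemd/system, so they are recreated on this host in the steps that follow. Of these, only kube-apiserver.service differs from Master01: its `--advertise-address` must be this host's IP. As an alternative to retyping the file, a minimal sketch that rewrites the flag in a copy of Master01's unit; `set_advertise_address` is a hypothetical helper.

```shell
#!/bin/sh
# Rewrite the --advertise-address value in unit-file text read from stdin.
# $1: the IP address this API server should advertise.
set_advertise_address() {
    sed -E "s/(--advertise-address=)[^ ]+/\1$1/"
}

# Example: adapt the flag line from Master01's unit for Master02.
printf '%s\n' '--advertise-address=192.168.22.10 \' | set_advertise_address 192.168.22.11
```

Given SSH access between the masters, the whole file could be piped through the function, for example from `ssh k8s-master01 cat /usr/lib/systemd/system/kube-apiserver.service`, with the result redirected to the same path on Master02.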

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-apiserver \

--v=2 \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=192.168.22.11 \

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.22.11:2379,https://192.168.22.12:2379,https://192.168.22.13:2379 \

--etcd-cafile=/opt/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/opt/etcd/ssl/etcd.pem \

--etcd-keyfile=/opt/etcd/ssl/etcd-key.pem \

--client-ca-file=/opt/kubernetes/pki/ca.pem \

--tls-cert-file=/opt/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/opt/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/opt/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/opt/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/opt/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/opt/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=Hostname,InternalDNS,InternalIP,ExternalDNS,ExternalIP \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/opt/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/opt/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/opt/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true \

--logging-format=json

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-controller-manager \

--v=2 \

--bind-address=0.0.0.0 \

--root-ca-file=/opt/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/opt/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/opt/kubernetes/pki/sa.key \

--kubeconfig=/opt/kubernetes/pki/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \

--cluster-cidr=172.16.0.0/12,fc00:2222::/112 \

--node-cidr-mask-size-ipv4=24 \

--node-cidr-mask-size-ipv6=120 \

--requestheader-client-ca-file=/opt/kubernetes/pki/front-proxy-ca.pem

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-scheduler \

--v=2 \

--bind-address=0.0.0.0 \

--secure-port=10259 \

--leader-elect=true \

--kubeconfig=/opt/kubernetes/pki/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=containerd.service

[Service]

ExecStart=/opt/kubernetes/bin/kubelet \

--v=2 \

--bootstrap-kubeconfig=/opt/kubernetes/pki/bootstrap-kubelet.kubeconfig \

--kubeconfig=/opt/kubernetes/pki/kubelet.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet-conf.yml \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--node-labels=node.kubernetes.io/node=

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-proxy \

--v=2 \

--config=/opt/kubernetes/cfg/kube-proxy.yaml \

--cluster-cidr=172.16.0.0/12,fc00:2222::/112

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

Enable the services at boot and start them

systemctl daemon-reload

systemctl enable --now kube-apiserver.service

systemctl enable --now kube-controller-manager.service

systemctl enable --now kube-scheduler.service

systemctl enable --now kubelet.service

systemctl enable --now kube-proxy.service

Deploying the kubelet and kube-proxy services on Node01 and Node02

cd /opt/

tar xvf kubernetes.tar.gz

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=containerd.service

[Service]

ExecStart=/opt/kubernetes/bin/kubelet \

--v=2 \

--bootstrap-kubeconfig=/opt/kubernetes/pki/bootstrap-kubelet.kubeconfig \

--kubeconfig=/opt/kubernetes/pki/kubelet.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet-conf.yml \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--node-labels=node.kubernetes.io/node=

[Install]

WantedBy=multi-user.target

mkdir -pv /etc/kubernetes/manifests

systemctl daemon-reload

systemctl enable --now kubelet.service

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-proxy \

--v=2 \

--config=/opt/kubernetes/cfg/kube-proxy.yaml \

--cluster-cidr=172.16.0.0/12,fc00:2222::/112

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

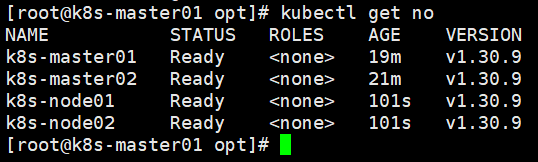

systemctl enable --now kube-proxy.serviceMaster01节点验证集群

kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready <none> 19m v1.30.9

k8s-master02 Ready <none> 21m v1.30.9

k8s-node01 Ready <none> 101s v1.30.9

k8s-node02 Ready <none> 101s v1.30.9
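For scripted checks, the same listing can be reduced to a pass/fail result. A minimal sketch that reads `kubectl get no` output on stdin and prints the nodes whose STATUS is not exactly `Ready`; `not_ready_nodes` is an illustrative helper.

```shell
#!/bin/sh
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Input: the table printed by "kubectl get no", header line included.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

if command -v kubectl >/dev/null 2>&1; then
    if out=$(kubectl get no --request-timeout=5s 2>/dev/null); then
        bad=$(printf '%s\n' "$out" | not_ready_nodes)
        if [ -z "$bad" ]; then echo "all nodes Ready"; else echo "not ready: $bad"; fi
    else
        echo "kubectl get no failed"
    fi
fi
```

Cordoned nodes (`Ready,SchedulingDisabled`) are reported as well, which is usually what a post-install check wants.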